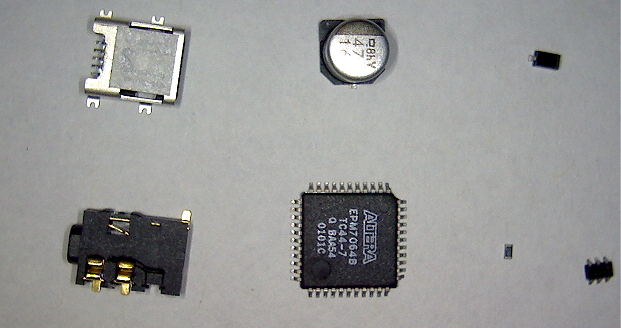

Quick ‘n dirty (but working!) image segmenter for randomly-strewn part identification. About 1 page worth of scripting takes an image of objects on background, determines which part is the background, determines the outside contour of each object and numbers each as a separate object. Now that it’s known where to look for one specific object, the task of identifying that object (or just matching it to another just like it) becomes a whole lot simpler. Combined with the auto-aligner, this reduces a “naive” (bruteforce cross-correlation between needle and haystack images) image matcher to only having to scan against 4 orientations (90-degree rotations) to find which has Pin 1 in the right place (and whether it’s the same part, etc.) Hopefully as I dig deeper into opencv, there is a less-naive algorithm builtin for this that does not rely on contrast/color historgrams: most electronic parts basically consist of a flat black body and shiny reflective metal leads (i.e. appearing the same color as your light source and/or the background, and/or whatever happens to be nearby at the moment). Edge-based stuff still seems like a better approach, though I would welcome being proven wrong if it means not having to write the identifier from scratch myself :-)

Steps in brief:

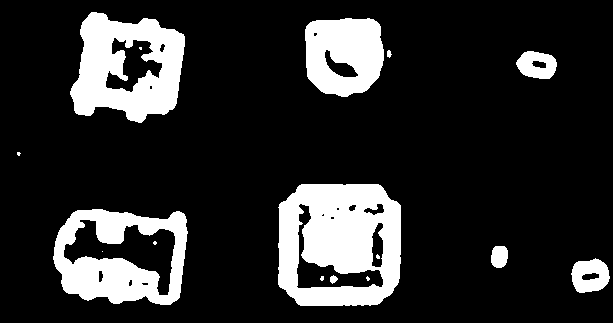

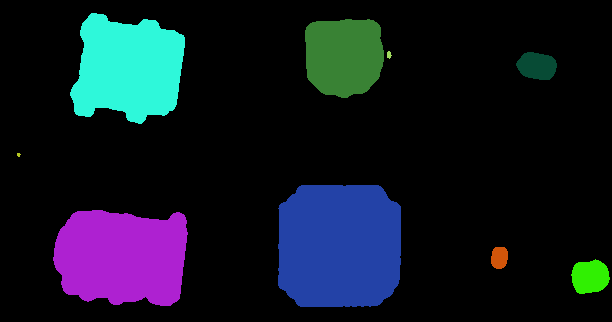

The first image was taken using the actual webcam that will be attached to the pick n place head, looking at a handful of representative parts on a piece of white paper. This image was dumbly processed using a Sobel edge-detector (it’s builtin to Gimp and I was feeling lazy), Gaussian blur to expand the soon-to-be-resulting mask around the part a little and close any gaps in the edge-detection result, and finally threshold the result to produce the second image. The goal in these steps is to produce a closed-form contour blob for each part that’s at least as wide as the part, while minimizing stray blobs from random noise / dirt specs / etc. (internal, fully-enclosed blobs/noise due to part features/markings is OK). Finally, opencv’s FindContours function is run (mode=CV_RETR_EXTERNAL) on the resulting image, returning a vector that contains a polygonal approximation of each external contour found. Each discrete (non-touching) contour blob is returned separately, that is, every part in the frame is now effectively tagged and numbered!

There are a couple noise points identified in the image above. Better-chosen constants for the initial image operations (threshold, blur radius, …) may help, but I’ll probably end up having it measure the area of the blobs and throw away any that’s too small to possibly contain a valid part. Switching to a more advanced edge-detector, e.g. Canny, may help too. In any case, the full image matcher should figure it out eventually :-)

Code Demo – basically ripped straight from the pyopencv examples

Segmentation example – requires Python (2.6) and opencv 2.1.0 / pyopencv.

Leave a Reply